Implementing a transaction history doesn’t seem like a big deal, but it turned out to be quite hard. Here is why, and how we managed to do it.

What a transaction history does is fairly obvious. So instead of explaining it, we will share some insight into the process of problem solving when you happen to be the first to deal with such a situation.

Unfortunately, it is not as easy as collecting and saving the game operations locally. What if a gamer decides to play on a different computer? What happens if one of our gamers starts a resync, or if we have to force a resync to roll out a brand new feature?

In that case the collected data would be destroyed instantly, because the client would download the preloaded blockchain, in which only the final game state is precomputed. Even if we extended the precomputed game state to include the needed data, sooner or later it would end in disaster! Collecting the necessary data for just 500 players would cause the preloaded blockchain service to grow by about 10 MB per month. The download would become enormous after only a short period of our game running.
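To put the growth figure into perspective, here is a rough back-of-the-envelope sketch. It assumes that the extra data grows linearly with the number of players, which is a simplification, and the 5,000-player case is a hypothetical number chosen only for illustration.

```python
# Figures from the text: about 10 MB of extra data per month for 500 players.
MB_PER_MONTH_MEASURED = 10
PLAYERS_MEASURED = 500


def monthly_growth_mb(players: int) -> float:
    """Estimated monthly growth in MB, ASSUMING growth is linear in player count."""
    return MB_PER_MONTH_MEASURED * players / PLAYERS_MEASURED


def size_after_mb(players: int, months: int) -> float:
    """Estimated extra data in MB accumulated after the given number of months."""
    return monthly_growth_mb(players) * months


print(size_after_mb(500, 12))   # 120.0 MB after one year with 500 players
print(size_after_mb(5000, 12))  # 1200.0 MB after one year with a hypothetical 5,000 players
```

Every new or resyncing client would have to download all of this, which is why packing the history into the preloaded game state does not scale.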

Besides, our balance tracker service, which is very useful for reducing the syncing time, only transmits transactions that contain unspent outputs. A transaction history built solely on its data would therefore be inconsistent. At the same time, we did not want to offer this feature only to people who are willing to sync the wallet themselves in order to include all transactions!

This is why we worked out a solution that fulfills all our needs.

We readjusted our services and searched for a suitable place in the data flow for transaction tracking. Finding this place was the trickiest and most difficult part of creating the transaction history.

Only specific locations in the flow of data result in a consistent data set. They sit where the data is extracted directly from the blockchain. Unfortunately, one important thing is missing at those locations: information about the current game version.

This led to a major problem: if the preloaded game state is used when starting the game, you automatically end up with the latest version. At that point, data related to a prior game version can no longer be interpreted. The solution was a resync computed by the client itself, which does not have this problem. The client analyzes the relevant data in chronological order and updates the game version whenever it has to.
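The idea of replaying data in chronological order while tracking the game version can be sketched roughly as follows. This is a minimal illustration and not the actual client code: the `Operation` type, the `VERSION_ACTIVATION` table and its block heights are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical table: block height at which each game version becomes active.
VERSION_ACTIVATION = {0: 1, 1000: 2, 2500: 3}


@dataclass
class Operation:
    height: int   # block height the operation was confirmed at
    txid: str     # transaction ID carrying the operation
    payload: str  # raw game data, interpreted according to the active version


def version_at(height: int) -> int:
    """Return the version whose activation height is the latest one <= height."""
    activation = max(h for h in VERSION_ACTIVATION if h <= height)
    return VERSION_ACTIVATION[activation]


def replay(operations):
    """Walk the operations in chronological order, tagging each with its version."""
    history = []
    for op in sorted(operations, key=lambda o: o.height):
        history.append((op.height, op.txid, version_at(op.height)))
    return history


ops = [Operation(3000, "c", "..."), Operation(10, "a", "..."), Operation(1500, "b", "...")]
for entry in replay(ops):
    print(entry)
```

Because each operation is paired with the version that was active at its own block height, old data stays interpretable even though the game has since moved on to a newer version.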

The other problem was related to forks, which caused some transactions to be displayed twice in the transaction history. It was also possible, at least in theory, for an uncompleted transaction to be displayed if someone tried to spend the same coin twice.

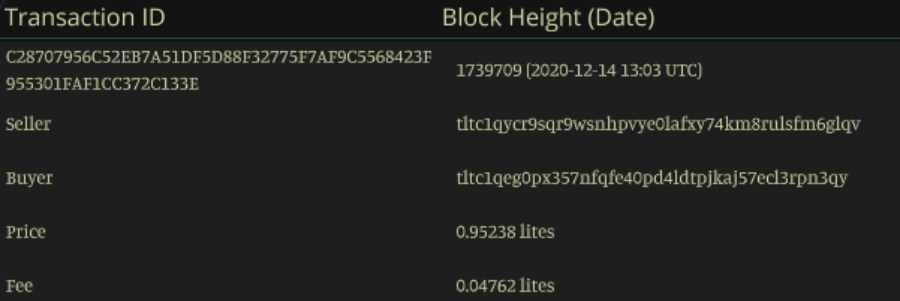

Solving this issue was not as easy as you might expect. The block explorer we use, ElectrumX, has the feature needed to compute the history but imposes a soft limit on the size of the result. After we got rid of this limit, we were confronted with the next problem. The output of the explorer only contains transaction IDs and block heights, not the transaction data we needed, which is why every single transaction would have to be looked up with the block explorer.
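To illustrate what such a history looks like and how duplicates and unconfirmed entries can be filtered out, here is a small sketch. It assumes entries shaped like ElectrumX history results, each with a `tx_hash` and a `height`, where a height of 0 or below marks a transaction that is not yet confirmed. The function name and the sample data are made up for illustration.

```python
def canonical_history(entries):
    """Keep confirmed transactions only, once per transaction ID, sorted by height."""
    confirmed = {}
    for entry in entries:
        if entry["height"] <= 0:
            # Not confirmed yet (for example one side of a double spend): skip it.
            continue
        # The same ID reported twice collapses into one entry; the last height wins.
        confirmed[entry["tx_hash"]] = entry["height"]
    return sorted(confirmed.items(), key=lambda item: (item[1], item[0]))


sample = [
    {"tx_hash": "bb", "height": 120},
    {"tx_hash": "aa", "height": 100},
    {"tx_hash": "bb", "height": 120},  # duplicate, e.g. seen again after a fork
    {"tx_hash": "cc", "height": 0},    # still unconfirmed
]
print(canonical_history(sample))  # [('aa', 100), ('bb', 120)]
```

Note that the result still carries only IDs and heights, which is exactly the gap described above: the actual transaction data has to come from somewhere else.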

Because every game operation equals a transaction, the number of transactions can become enormous in a short period of time. For instance, one of our team members created 16,000 transactions while the game was still in the testing phase. Computing such an account would take the block explorer about 20 seconds. If more than one complex account had to be computed at the same moment, the waiting time would become unacceptable.

To solve this, we created a database that stores every transaction ID together with the corresponding transaction data. Whenever data is requested from ElectrumX, the transaction IDs are compared with this cache. After that, only the data of transactions that are not already in the cache is downloaded.
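The caching idea can be sketched as follows. This is a simplified illustration rather than our production code: the `TxCache` class, the SQLite table layout and the `fetch` callback (standing in for a request to the block explorer) are assumptions made for the example.

```python
import sqlite3


class TxCache:
    """Maps transaction IDs to raw transaction data; fetches only what is missing."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS tx (txid TEXT PRIMARY KEY, data BLOB)"
        )

    def load(self, txids, fetch):
        """Return {txid: data}, calling fetch(txid) only for uncached IDs."""
        result = {}
        for txid in dict.fromkeys(txids):  # drop duplicate IDs, keep their order
            row = self.db.execute(
                "SELECT data FROM tx WHERE txid = ?", (txid,)
            ).fetchone()
            if row is None:
                data = fetch(txid)  # cache miss: ask the (hypothetical) explorer
                self.db.execute("INSERT INTO tx VALUES (?, ?)", (txid, data))
            else:
                data = row[0]       # cache hit: no network round trip
            result[txid] = data
        self.db.commit()
        return result


requested = []

def fake_fetch(txid):
    """Stand-in for a block explorer lookup; records which IDs were requested."""
    requested.append(txid)
    return ("raw-" + txid).encode()

cache = TxCache()
cache.load(["a", "b"], fake_fetch)
cache.load(["a", "b", "c"], fake_fetch)
print(requested)  # ['a', 'b', 'c']
```

On the second call only the new transaction is requested, so an account with thousands of transactions pays the full lookup cost once and only the difference afterwards.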

In addition, we decided to compress the data, which reduced its size by 80 percent. In the end, we were able to bring the processing time down to 300 milliseconds on average.